In the time of COVID-19, much of the science news that reaches the general public relates to the virus, and understandably so. But other domains, even within medical research, are still active, and as usual there are tons of interesting (and heartening) stories out there that shouldn’t be lost in the furious activity of coronavirus coverage. This last week brought good news for several medical conditions, as well as some innovations that could improve weather reporting and maybe save a few lives in Cambodia.

Ultrasound and AI promise better diagnosis of arrhythmia

Arrhythmia is a relatively common condition in which the heart beats at an abnormal rate or rhythm, causing a variety of effects, including, potentially, death. It is usually detected with an electrocardiogram (ECG), and while the technique is sound and widely used, it has its limitations: first, it relies heavily on an expert interpreting the signal, and second, even an expert’s diagnosis doesn’t give a good idea of what the problem looks like in that particular heart. Knowing exactly where the flaw is makes treatment much easier.

Ultrasound is used for internal imaging in lots of ways, but two recent studies suggest it could be the next major step in arrhythmia treatment. Researchers at Columbia University used a form of ultrasound monitoring called Electromechanical Wave Imaging to create 3D animations of patients’ hearts as they beat, which helped specialists correctly predict 96% of arrhythmia locations, compared with 71% when using the ECG. The two could be used together to provide a more accurate picture of the heart’s condition before a patient undergoes treatment.

Another approach, from Stanford, applies deep learning techniques to ultrasound imagery and shows that an AI agent can recognize the parts of the heart and measure how efficiently it is pumping blood, with accuracy comparable to that of experts. As with other medical imaging AIs, this isn’t about replacing a doctor but augmenting them: an automated system can help triage and prioritize cases effectively, suggest things the doctor might have missed, or provide an impartial check on their opinion. The code and data set for the system, called EchoNet, are available for download and inspection.
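Cardiologists usually summarize how efficiently the heart pumps as the left ventricular ejection fraction: the share of the blood in the ventricle at its fullest that is pushed out in a single beat. The short sketch below shows that arithmetic. It is our illustration of the metric, not EchoNet’s code, and the function name and sample volumes are hypothetical. An imaging model has to estimate the underlying volumes (or the fraction itself) from the ultrasound video, which is the hard part.

```python
def ejection_fraction(edv_ml: float, esv_ml: float) -> float:
    """Return left ventricular ejection fraction as a percentage.

    edv_ml: end-diastolic volume (ventricle at its fullest), in millilitres.
    esv_ml: end-systolic volume (ventricle at its emptiest), in millilitres.
    """
    if edv_ml <= 0:
        raise ValueError("end-diastolic volume must be positive")
    if not 0 <= esv_ml <= edv_ml:
        raise ValueError("end-systolic volume must lie between 0 and the end-diastolic volume")
    stroke_volume = edv_ml - esv_ml  # blood ejected in one beat
    return 100.0 * stroke_volume / edv_ml


if __name__ == "__main__":
    # Hypothetical volumes for illustration only, not patient data.
    print(f"{ejection_fraction(120.0, 50.0):.1f}%")  # prints 58.3%
```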

The moment one technology begins augmenting or supplanting another is always delicate but hopeful. Ultrasound isn’t exactly exotic, and these appear to be largely advances on the software side, so perhaps they will be adopted sooner rather than later.

A new angle on macular degeneration

Another common condition that would be much easier to deal with if it could be reliably diagnosed earlier is macular degeneration. In this condition, cells in the retina degrade with age, which can lead to worsening vision and blindness. It can be difficult to identify early on because the retina is so complex and difficult to access.

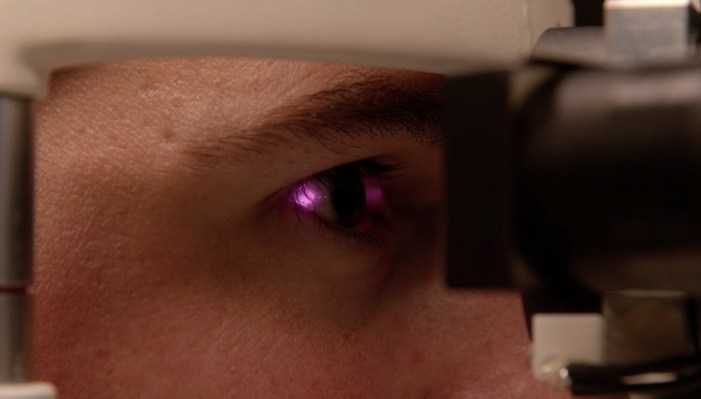

Scientists at the École polytechnique fédérale de Lausanne (EPFL) in Switzerland have come at the problem from a new angle: a diagonal one. Normally, and naturally, to image the eye one looks through the pupil and lens, because they’re transparent. But just as looking straight down onto a landscape makes it difficult to see the layers beneath, it is difficult to image the deeper layers of the retina head-on. The EPFL team instead illuminates the eye from an angle, through the sclera, or white of the eye (you can see it in the top image).

This method lets them image the retina at the cellular level and identify potential problems even in healthy people who have reported no vision issues. As with any disease that can be caught before symptoms appear, this could be of huge benefit if employed on a large scale. That’s the plan, as you might expect, and the technology has already been spun off into a new company called EarlySight.

Computer vision helps the hunt for unexploded bombs

The legacy of war lasts decades, especially in countries without the means to undertake costly cleanup efforts for things like bombs and mines. Cambodia is one of those, and tens of thousands have died from the unexploded ordnance the country was peppered with during the Vietnam War.

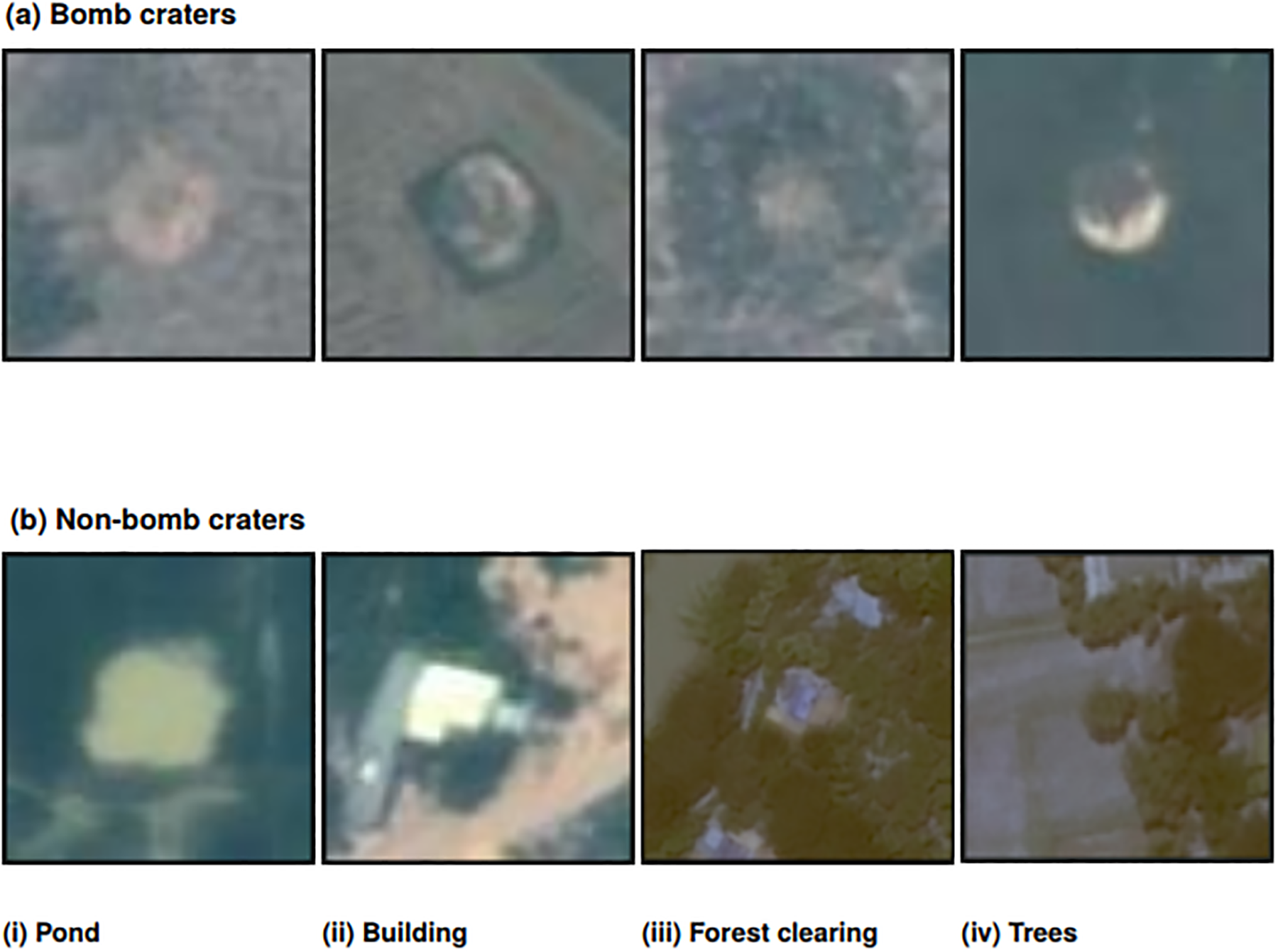

Ohio State University’s Erin Lin and Rongjun Qin, knowing that the scarce resources available need to be targeted effectively to save lives, began looking into identifying bomb craters from satellite imagery. They applied a computer vision algorithm originally designed for locating craters on other planets, then augmented it with another that’s more aware of how those craters change over time, with grass and erosion changing the shape and color.

The two-part algorithm first identified over a thousand potential craters, then narrowed that count down to around 200. A human checker going through the same data found 177 craters, 152 of which the algorithm had correctly flagged, or about 86%. Obviously for life-and-death matters like this you want higher accuracy than that, but this tool is meant to be part of a larger effort to direct resources. If records say 5,000 bombs were dropped in an area, and you can identify 2,800 craters with 85% accuracy, that makes for a rough estimate of 1,000-1,500 unexploded bombs.
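The study’s counts map onto the two numbers usually used to judge a detector: precision (how many flagged candidates are real craters) and recall (how many real craters get flagged). The roughly 85% figure is recall, 152 of 177. The sketch below computes both and adds a deliberately simple helper for estimating leftover bombs. The class and function names, the treatment of “around 200” as exactly 200, and the one-crater-per-detonated-bomb assumption are ours, not the researchers’.

```python
from dataclasses import dataclass


@dataclass
class DetectorCounts:
    flagged: int         # candidates the algorithm kept after its second pass
    true_craters: int    # craters a human reviewer confirmed in the same area
    true_positives: int  # confirmed craters the algorithm had also flagged

    @property
    def precision(self) -> float:
        # Share of flagged candidates that turned out to be real craters.
        return self.true_positives / self.flagged

    @property
    def recall(self) -> float:
        # Share of real craters the algorithm managed to flag.
        return self.true_positives / self.true_craters


def estimate_unexploded(bombs_dropped: int, craters_flagged: int,
                        precision: float, recall: float) -> float:
    """Rough count of bombs that left no crater (i.e. may not have exploded).

    Hypothetical simplifying assumptions: each detonated bomb leaves exactly
    one crater, and the detector's precision and recall carry over unchanged
    to the new area.
    """
    real_flagged = craters_flagged * precision  # discount likely false alarms
    real_craters = real_flagged / recall        # add back craters the detector missed
    return max(0.0, bombs_dropped - real_craters)


if __name__ == "__main__":
    # Counts from the Cambodia study; "around 200" approximated as 200.
    study = DetectorCounts(flagged=200, true_craters=177, true_positives=152)
    print(f"precision ~ {study.precision:.2f}, recall ~ {study.recall:.2f}")
    # prints: precision ~ 0.76, recall ~ 0.86
```

Real-world estimates would also need to account for craters that have been filled in, farmed over or built on, so a helper like this is only a starting point, and its output is very sensitive to the assumptions fed into it.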

It’s valuable information that can be gathered quickly and with little training; the rest is up to the people putting their lives on the line to save others from the violent folly of indiscriminate warfare.

Putting a bounce in your step

Running is a full-body exercise to be sure, but your calves are doing a lot of the work lifting your body. Another project out of Stanford aims to power up the spring-like motion of a runner’s leg. The setup looks a bit jury-rigged — ropes tied to pegs — but the results are apparently quite delightful.

The mechanism attaches to the lower leg and essentially assists the part of the stride where the runner pushes off with their toes, helping the calf muscles lift the body’s weight. To the team’s surprise, an actual spring mechanism didn’t work: it required more energy from the runner. But by powering the spring-like motion, they achieved as much as a 15% increase in running efficiency.

Grad student (and runner) Delay Miller said the assist feels “really good,” noting that “powered assistance took off a lot of the energy burden of the calf muscles. It was very springy and very bouncy compared to normal running. When the device is providing that assistance, you feel like you could run forever.”

This type of assistive exoskeleton is still just a lab demonstration, but powered exos are already starting to help people with mobility issues accomplish tasks like getting up from a chair or standing for long periods of time.

Rain’s coming… I can feel it in my neural nets

Predicting the weather is extremely difficult, so I feel for the meteorologists who constantly have to update their educated guesses at what’s going to happen in a system governed by chaos. Google may be able to take some of the pressure off them with a new “neural weather model” that’s essentially a formal educated guess maker.

MetNet, as they call it, actually doesn’t know anything about how weather “works,” like high-pressure zones, cloud types and so on. Instead, it has just watched many hours of radar data to find patterns in how the shapes move and change. Based on that, it can predict weather patterns out to about eight hours as well as or better than much more complex models.

More importantly, it can do this quickly and continuously. And rather than saying, “seven hours from now I think this cloud in particular will be here,” it gives probabilistic results: the cloud has a 70% chance of being here, a 20% chance of being within a mile of there, and a 10% chance of being a mile further. That may translate more directly into the “40% chance of rain” we are all used to. Who, after all, looks at a Doppler radar image in their weather app and thinks, “what a threatening formation”?
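A toy version of that idea: if a forecast assigns a probability to each place a rain cloud might end up, the chance of rain at any given spot is the sum of the probabilities of every outcome that puts rain over it. This is our simplified illustration, not MetNet’s actual method; the function name, the one-dimensional strip of ground cells and the cloud width are all hypothetical.

```python
import math


def chance_of_rain(position_probs: dict[int, float], cloud_width: int,
                   num_cells: int) -> list[float]:
    """Chance that rain covers each cell of a 1-D strip of ground.

    position_probs maps a cloud's possible starting cell (its leading edge)
    to the probability that it ends up there; the cloud then covers
    `cloud_width` consecutive cells. A cell's chance of rain is the total
    probability of every position whose footprint includes that cell.
    """
    total = sum(position_probs.values())
    if not math.isclose(total, 1.0):
        raise ValueError(f"position probabilities must sum to 1, got {total}")
    rain = [0.0] * num_cells
    for start, p in position_probs.items():
        for cell in range(start, min(start + cloud_width, num_cells)):
            if cell >= 0:  # ignore parts of the cloud that fall off the strip
                rain[cell] += p
    return rain


if __name__ == "__main__":
    # Hypothetical forecast echoing the example above: 70% the cloud stays
    # at cell 0, 20% it drifts to cell 1, 10% it drifts to cell 2.
    forecast = {0: 0.7, 1: 0.2, 2: 0.1}
    for cell, p in enumerate(chance_of_rain(forecast, cloud_width=2, num_cells=4)):
        print(f"cell {cell}: {p:.0%} chance of rain")
    # prints 70%, 90%, 30% and 10% for cells 0 through 3
```

Note that the per-cell chances don’t sum to 100%: each one answers a separate question (“will it rain here?”), which is exactly the form a weather app’s percentage takes.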

Given the practical applications of this technology, it seems likely that we’ll see it integrated with Google’s weather models in the future as a special sauce for near-term weather predictions.

Ozone layer recovery

It would be easy to miss this little nugget of good news, but it’s important to keep it in context. Scientists have observed changes in the Earth’s large-scale atmospheric circulation and believe they are the result of the ozone layer partly recovering from decades of battering at the hands of growing industry. This doesn’t mean the climate is saved or anything, since there are other serious problems that need tackling, but it’s nice to know that at the very least the Antarctic ozone layer appears to be getting better.

Facebook “must do better” in research partnerships

In an informative opinion piece, TU Munich political data science professor Simon Hegelich compares the half-hearted effort by Facebook to responsibly share access to its data with the approach it takes with paying advertisers.

Hegelich, who received access to Facebook’s new researcher data set himself in order to study disinformation campaigns, says:

> The data provided are nearly useless for answering this and many other research questions, and are far inferior to what Facebook gives private companies.
>
> My opinion is that Facebook is working with university researchers mainly to gain positive news coverage and to reduce political pressure on the company.

He says that the data Facebook has provided to researchers is spoiled by shoddy anonymization measures while similar but far more detailed data is sold to advertisers — and that Social Science One, the “independent” organization set up to mediate the Facebook-academic connection, “is not truly independent.”

No doubt Facebook and companies like it are navigating new ground in these efforts, but that doesn’t mean they can’t be called to account for their shortcomings.