There’s more AI news out there than anyone can possibly keep up with. But you can stay tolerably up to date on the most interesting developments with this column, which collects AI and machine learning advancements from around the world and explains why they might be important to tech, startups or civilization.

Before we get to the research in this edition, however, here’s a study from the ITIF trade group evaluating the relative positions of the U.S., EU and China in the AI “race.” I put race in quotes because no one knows where we’re going or how long the track is — though it’s still worth checking who’s in front every once in a while.

The answer this year is the U.S., which is ahead largely due to private investment from large tech firms and venture capital. China is catching up in terms of money and published papers but still lags far behind and takes a hit for relying on U.S. silicon and infrastructure.

The EU is operating at a smaller scale and making smaller gains, especially in the area of AI-based startup funding. Part of that is no doubt the inflated valuations of U.S. companies, but the trend is clear, and it may also present an opening for investors, who might see this as a chance to get in on some high-quality startups without needing quite so much capital.

The full report (PDF) goes into much more detail, of course, if you’re interested in a more granular breakdown of these numbers.

If the authors had known about this new Amazon-funded AI research center at USC, they probably would have pointed to it as a good example of the type of partnership that helps keep U.S. production of AI scholars up.

A touch of class

On the farthest possible end from monetization and practical application, we have two interesting uses of machine learning in fields where human expertise is valued in different ways.

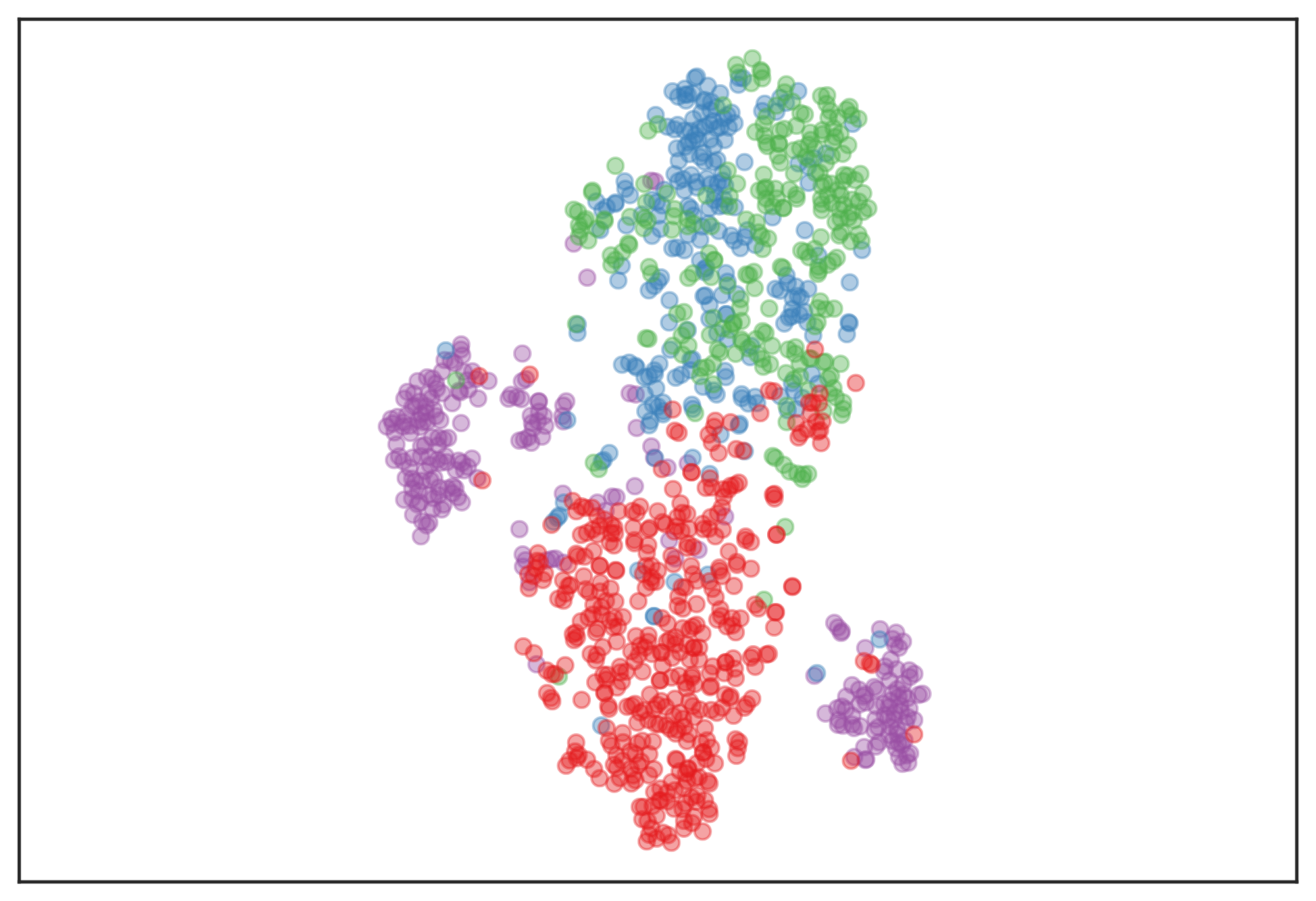

At Switzerland’s EPFL, some music-minded boffins at the Digital and Cognitive Musicology lab were investigating the shift in the use of modes in classical music over the ages — major, minor, other or none at all. In an effort to objectively categorize thousands of pieces from hundreds of years and composers, they created an unsupervised machine learning system to listen to and categorize the pieces according to mode. (Some of the data and methods are available on GitHub.)

“We already knew that in the Renaissance, for example, there were more than two modes. But for periods following the Classical era, the distinction between the modes blurs together. We wanted to see if we could nail down these differences more concretely,” one of the researchers explained in a university news release.

The model ended up isolating several distinct clusters of mode usage, arrived at without any knowledge of music theory or history. However one chooses to interpret the results, it is a fascinating new method for evaluating the classical corpus.

Another cultural mainstay, chess, had its turning point in the famous match between Garry Kasparov and Deep Blue.

But now the question is not how to make a stronger chess AI (it’s not necessary) but how to make one play more like a human. What was it about Kasparov that made him so good? As he explained in his book on the Deep Blue match, it certainly wasn’t raw computing power. Cornell researchers think that emulating human skill is a deeper and more interesting problem than overpowering it.

The idea was to create an AI that understood the game as a player of a certain level of skill did, and part of the approach was to teach it to focus on individual moves rather than overall victory. Knowing what moves people of various skill levels make and why could make for a very convincing opponent, and a more helpful one when it comes to teaching.

The resulting (work-in-progress) AI, Maia, is supposedly available to play against on lichess.org, but I’ll be damned if I can find it.

AI’s eagle eye

Medical imaging is an incredibly broad and diverse field, so it’s no surprise that practically every edition of this column has a new application of machine learning in it. But spotting schizophrenia seems far more difficult than spotting early signs of a tumor or cataract. Nevertheless, researchers at the University of Alberta think they’ve got something worth bringing into practice.

A computer-vision model trained on MRI imagery of schizophrenic patients was set loose on dozens of MRIs of close relatives — the illness is not exactly congenital, but risk seems to run higher in some families. The model “accurately identified the 14 individuals who scored highest on a self-reported schizotypal personality trait scale.”

This is good — and realistic. No one should be hoping for, let alone reporting, an AI that can diagnose schizophrenia based on brain imagery. But there are patterns in brain tissue that seem to correlate with various illnesses, though we may not know why — and AIs are excellent at pattern detection. That this one correctly pointed out the folks that seem to be running a higher risk as suggested by other metrics makes it potentially a very useful tool for psychiatrists and other medical personnel.

Spotting elephants may seem like an easier task, but … well, it is. Still, that doesn’t mean it’s easy, especially from orbit. High-resolution orbital imagery could be a gold mine for conservationists and ecologists, but there’s so much of it that automation is practically a requirement if you want to make it worth looking at in the first place.

For decades that sort of thing has been the province of grad students, research assistants and a pot of coffee, but as we’ve seen before, ML is great at picking out animals, such as dugongs, from the background.

Tracking elephants can be difficult despite their great size, since they can cover so much distance and inhabit so many different biomes. But this team from Oxford and partners at Bath and ESA suggests that, given a certain resolution now available, elephant positions could be updated as often as daily. It’s not quite as good as human labeling, but as with other examples of this type of work, it’s more about reducing the workload than doing the whole thing.

Another crucial task that seems obvious to do from overhead is identifying trees. Forest management is often done by manually visiting the sites and surveying from the ground, or at best via small aircraft or drone.

Russia’s Skolkovo Institute of Science and Technology has demonstrated that with satellite data (and especially if it’s augmented by height data, perhaps from another orbital source like radar), they can identify a given area’s dominant species. The resulting contour maps should be very useful to forest ecologists, especially if tracked over time. Mixed forests still escape this model’s powers of understanding, but this is already a potentially very helpful piece of software.

Accessible icons

This last item came up while I was putting the above together. Google is working on a computer-vision system that can recognize and label dozens of common icons like forward, back, options, list view and so on.

Accessibility on mobile phones is a spotty proposition. Some apps and services have every icon, every button labeled, while others do the bare minimum or less. If the phone’s OS could fill in the gaps, that could be extremely useful for people with visual impairments. Sometimes it can by using information from the app’s code, but it would be helpful to just be able to look and say: That’s an undo button.

Google’s working on just that — a focused, fast convolutional neural network called IconNet that takes up very little space and will soon recognize over 70 icons in milliseconds with minimal processing overhead.

Sounds great, right? So it shouldn’t be a surprise that Apple shipped a feature quite like this one with iOS 14 in September.

Well, you can’t win them all. But in any case the winners are the users, who will have more accessibility options thanks to fierce competition in the AI space.